Set Up Your Own Local Coding Copilot in VSCode Using Ollama and Continue

The open-source Ollama tool lets you run large language models locally, and it can also be used to set up a coding copilot within the Visual Studio Code IDE. In this post, we will explore how to create your own locally running coding copilot at no cost while keeping your data private.

The concept of an integrated coding assistant within a code editor is not new. Most IDEs already offer IntelliSense-style popups that use static code analysis to suggest and complete code. With the rise of generative AI, Large Language Models (LLMs) have taken this idea much further: beyond offering suggestions, they can draw on context from across a codebase and generate complete files of working code.

GitHub Copilot, powered by OpenAI’s Codex model, is a widely popular, paid coding assistant that can significantly boost developer productivity. However, if you prefer not to spend money or have concerns about data privacy, you can opt for an open-source alternative using Ollama and Visual Studio Code.

Set Up Ollama

Download and install Ollama on your machine:

- Download for Mac

- Download for Windows (Preview)

- For Linux, run:

curl -fsSL https://ollama.com/install.sh | sh
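Once the installer finishes, it can be handy to confirm that the Ollama server is actually up. The sketch below is one way to do that in Python, assuming Ollama is listening on its default local address, http://localhost:11434. The helper name is_ollama_running is made up for this post and is not part of Ollama.

```python
import urllib.request

# Assumption: Ollama is serving on its default local address.
OLLAMA_URL = "http://localhost:11434"


def is_ollama_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP GET on the base URL succeeds with status 200.

    is_ollama_running is a hypothetical helper written for this post.
    """
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError):
        # OSError covers refused connections, timeouts and HTTP errors;
        # ValueError covers a malformed URL.
        return False


if __name__ == "__main__":
    print("Ollama reachable:", is_ollama_running())
```

If this prints False, starting the server (for example with ollama serve) and trying again is a reasonable next step.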

The Ollama model library offers many LLMs suitable for code generation, but we’ll use the DeepSeek Coder 6.7B model as our copilot. Run the following command to download it:

ollama pull deepseek-coder:6.7b
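Before wiring the model into the editor, you may want to send it a quick test prompt. Here is a minimal sketch that talks to Ollama's local HTTP API using only the Python standard library. It assumes the server is on the default http://localhost:11434 and that the model pulled above is available. The function names build_generate_payload and generate are illustrative, and the generous timeout is a guess to allow for the first model load.

```python
import json
import urllib.request

# Assumptions: default Ollama address, and the model pulled in this post.
OLLAMA_URL = "http://localhost:11434"
MODEL = "deepseek-coder:6.7b"


def build_generate_payload(model: str, prompt: str) -> dict:
    """Build the JSON body for POST /api/generate.

    stream=False asks Ollama for a single JSON reply instead of a stream.
    """
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, model: str = MODEL,
             base_url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send one prompt to the local model and return the generated text."""
    body = json.dumps(build_generate_payload(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=body,  # supplying data makes this a POST request
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    try:
        print(generate("Write a Python function that reverses a string."))
    except OSError as err:
        # Raised when the server is unreachable or the model is missing.
        print(f"Could not get a reply from Ollama at {OLLAMA_URL}: {err}")
```

If the model answers here, the Continue extension should be able to reach it at the same address.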

Set Up VSCode

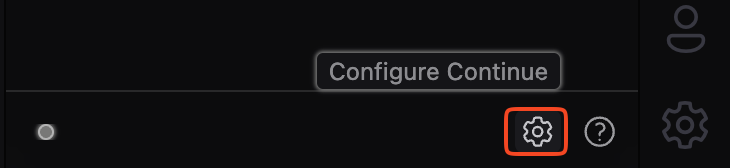

- Install the Continue VSCode Extension.

- Click on the gear icon in the bottom right corner of Continue to open config.json and add the following code:

{
  "models": [
    {
      "title": "DeepSeek Coder 6.7b",
      "provider": "ollama",
      "model": "deepseek-coder:6.7b"
    }
  ],
  "tabAutocompleteModel": {
    "title": "DeepSeek Coder 6.7b",
    "provider": "ollama",
    "model": "deepseek-coder:6.7b"
  },
  "embeddingsProvider": {
    "provider": "ollama",
    "model": "nomic-embed-text"
  }
}

- Run ollama pull nomic-embed-text to download the embedding model.
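The config above names two Ollama models, and each one has to be pulled before Continue can use it. As a rough sketch, the snippet below walks a config with the same shape and prints the ollama pull command for every model it references. The function name ollama_models_in_config is hypothetical, and the code assumes the config is plain JSON with exactly the keys shown in this post.

```python
import json
from typing import List

# The same config as in this post, embedded so the example is self-contained.
EXAMPLE_CONFIG = """
{
  "models": [
    {"title": "DeepSeek Coder 6.7b", "provider": "ollama", "model": "deepseek-coder:6.7b"}
  ],
  "tabAutocompleteModel": {
    "title": "DeepSeek Coder 6.7b", "provider": "ollama", "model": "deepseek-coder:6.7b"
  },
  "embeddingsProvider": {"provider": "ollama", "model": "nomic-embed-text"}
}
"""


def ollama_models_in_config(config: dict) -> List[str]:
    """Collect unique Ollama model names from a Continue-style config dict.

    Hypothetical helper: it only looks at the three keys used in this post.
    """
    entries = list(config.get("models", []))
    for key in ("tabAutocompleteModel", "embeddingsProvider"):
        if key in config:
            entries.append(config[key])

    names: List[str] = []
    for entry in entries:
        name = entry.get("model")
        # Keep only Ollama-backed entries, skipping duplicates.
        if entry.get("provider") == "ollama" and name and name not in names:
            names.append(name)
    return names


if __name__ == "__main__":
    for model_name in ollama_models_in_config(json.loads(EXAMPLE_CONFIG)):
        print(f"ollama pull {model_name}")
    # prints:
    # ollama pull deepseek-coder:6.7b
    # ollama pull nomic-embed-text
```

This is only a convenience check. If you later swap in a different model, rerunning it against your own config shows which pulls are still needed.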

Copilot is ready!

That's it! Your local coding copilot is now ready to take off.

Thanks for stopping by! I'll catch you in the next post.

Keep learning, stay curious, and keep coding!